That feeling when you see some awful code, and then realise you wrote it. Here's a story of how some bad code I wrote in a unit test, made it into production and caused a memory leak in a NodeJS application.

A project that I work on started showing crashed Pods in our Kubernetes (K8s) cluster, which runs a NodeJS server in a container, it was failing with a dreaded "OOMKilled" (Out of memory killed) error, which sent me down the path of learning about profiling NodeJS applications for memory leaks.

If you don't know much about Kubernetes, that doesn't matter for this article, the fact the NodeJS application is running in K8s is incidental to the tale, and I will only mention it briefly when discussing debugging in Kubernetes.

I learnt a lot through investigating this memory leak, not just about memory profiling, but about the risk of writing "quick and dirty code", even if it's not for production use.

Here is an obfuscated and simplified explanation of the terrible, terrible code that I originally wrote.

The bad code

So, a few months ago I wrote some code some unit tests which exercised some file validation logic. When I wrote this code I needed to get access to the supported file extensions for the validator, for doing some checks, which lived somewhere else, so I very lazily dumped the file extensions onto an object that I was processing in the test, just for test purposes, right? it'll be fine.

My simplified version of the production module:

const supportedValidators = ["name"];

module.exports = {

validators: () => {

return supportedValidators.map((validator) => {

// imports ./validators/name.js what name.js does is not important.

return require(`./validators/${validator}.js`);

});

},

};What's happening here is a function is exported which exposes other modules via a dynamic require statement. This dynamic require statement is very important to the memory leak and i'll come back to it.

As an aside using require like this in the code is blocking, so it might not be the best thing to use. For more modern code we could use

import()in ES Module code.

Now for the bad code, I had in my unit test:

const { validators } = require("./validate");

const badFunction = () => {

const myValidators = validators();

myValidators.map((validator) => {

// mutating someone elses object, yeah i know, this is super bad.

if (!validator.supportedExtensions) {

validator.supportedExtensions = [];

}

// the code didn't do this exactly this is demo

validator.supportedExtensions.push(".pdf");

});

};This code is terrible for so many reasons, but it was only for unit tests. This code looks weird, I know, but in context of what I used this for in our tests does make sense, I promise, but this simplified sample is to demonstrate the memory leak.

Unfortunately, only for unit tests ended up not being true, and here is my first learning from this experience:

Any code that you write anywhere, including inside of unit tests, could be copied by any well meaning developer adapting the code for their use case.

This happened, and unfortunately the "just for test" code with the supportedExtensions = [] and .push(".pdf") came along for the ride, even though it wasn't needed at all for the production use case.

Why is this a memory leak?

So on the face of it if you were to look at the myValidators.map code it doesn't look like I'm holding any references to the supportedExtensions object once badFunction its finished. But that assumption ignores that the Array, or the contents of it, that validators() supplies could be retained in memory forever.

Objects in Memory are available for Garbage collection when there are no references to the object anymore and nothing is holding on to those objects. This is a massive over-simplification, I'll link a great talk later in this article which describes it much better than I ever could.

Do you remember this line of code?

return require(`./validators/${validator}.js`);A NodeJS module is being imported into the current scope, the important thing about NodeJs modules though is that:

No matter how many times you import a module you always get the a reference to the same module object, so:

const foo = require("./foo");

const foo2 = require("./foo2");

foo === foo2; // trueThis means that even if we lose all references to the imported module, it wont be garbage collected.

In this case, this is extremely problematic because validator.supportedExtensions.push(".pdf"); will add a new entry to an array, that lives on this module object forever, every time the function is called. Imagine if this was on an REST API call (it was). Yikes.

A couple more learnings here:

- Mutating objects in an array someone else passed to you is dangerous, you have no idea what references are held to that object, your modifications may never be garbage collected.

- If you do have to put bad non-production code in, put a massive comment around it warning future developers, not to copy it.

- Avoid changing objects you don't control.

On a somewhat related note to the learnings above, Jake Archibald recently wrote about the risks of calling methods with potentially non-future proof parameters, it's a good read: https://jakearchibald.com/2021/function-callback-risks/.

Memory profiling and finding the problem code

When I first realised that we might have a Memory leak I first wanted to make sure I knew what tools where at my disposal,

I headed to YouTube and found this great video on the topic.

If you open the Developer Tools when you have a node --inspect process running you should notice a new Node logo, like the one shown below:

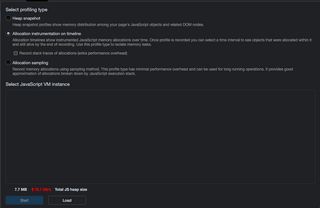

When you activate this mode, a new window will open where one of the tabs in the new window is "Memory".

To try and find the issue I selected the option "Allocation instrumentation timeline", I then proceeded to run our application tests which had caused the original "OOMKilled" on our cluster. After these tests ran I stopped the instrumentation and proceeded to sort through the results.

I found that sorting by size and then searching the largest allocated objects helped me find the problem.

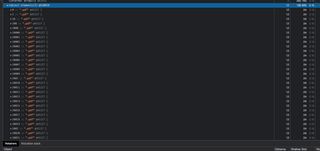

Sure enough after filtering through a lot of noise I found something like this:

Luckily for me, I know our codebase quite well and was able to identify the problem area based on the variable name of the large array and also the array contents, which is where to my shame I found my awful code being used inside of an API call.

Memory profile in Kubernetes

Memory profiling in Kubernetes is quite similar to when you are running locally, you need to end up with a server on your machine exposing a debugging session.

Recommendations:

- Scale your deployment down to 1 replica.

- Edit your deployment so your NodeJS server sets the

--inspectflag - Disable liveness and readiness probes for the container, otherwise K8s may kill your session whilst debugging.

- Increase your memory limits and requests, profiling can take up to 2x more memory than usual.

- Locate the pod you want to debug and run

kubectl port-forward pod-name 9229this will result in the same debugging session as earlier running on your machine, forwarded from the K8s cluster.

Reproducing the memory leak with demo code

If you want to have a play reproducing the memory leak you could do this:

- Create the following folder and file:

validators/name.jsCan be empty module exports:

module.exports = {};- Create

validate.js

const supportedValidators = ["name"];

module.exports = {

validators: () => {

return supportedValidators.map((validator) => {

return require(`./validators/${validator}.js`);

});

},

};- Create

bad-code.js

const { validators } = require("./validate");

const badFunction = () => {

const myValidators = validators();

myValidators.map((validator) => {

if (!validator.supportedExtensions) {

validator.supportedExtensions = [];

}

// the code didnt do this exactly this is demo

validator.supportedExtensions.push(".pdf");

});

};

let index = 0;

setInterval(() => {

// even though theres no references to myValidators array

// there is a memory leak with the .push

badFunction();

index++;

console.log(`Running bad code cycle: ${index}`);

}, 0);- Run

node --inspect bad-code.jsYou will need NodeJS installed https://nodejs.org. - Open Chrome developer tools, you should see the NodeJS logo which says "Open dedicated DevTools for NodeJS"

- Run the profiling techniques as described in the previous section.

Learnings

I learnt a lot through this experience.

- Avoid writing sloppy code, even if it is non-production.

- Mutating data that is passed to you is dangerous, you have no idea what references are held to that object, your modifications may never be garbage collected.

- How to Memory profile in NodeJS

I hope this was a good read, if only to laugh at my terrible code, if you feel like reading more of my ramblings, please follow me on Twitter @griffadev.